|

||||||||||||||||||||||||||

Hill Climbing - Python Automation and Machine Learning for ICs - - An Online Book - |

||||||||||||||||||||||||||

| Python Automation and Machine Learning for ICs http://www.globalsino.com/ICs/ | ||||||||||||||||||||||||||

| Chapter/Index: Introduction | A | B | C | D | E | F | G | H | I | J | K | L | M | N | O | P | Q | R | S | T | U | V | W | X | Y | Z | Appendix | ||||||||||||||||||||||||||

================================================================================= Hill climbing is a local search algorithm that starts with an arbitrary solution to a problem and iteratively makes small changes to it, moving towards a higher-value solution. The goal is to find the peak of the solution space, which represents the optimal solution according to the evaluation function. There are different variations of hill climbing, such as steepest ascent hill climbing, where the algorithm always chooses the neighbor with the highest value, and steepest descent hill climbing, where it chooses the neighbor with the lowest value. While hill climbing is a simple and intuitive optimization algorithm, it may get stuck in local optima and fail to find the global optimum. To overcome this limitation, variations like simulated annealing and genetic algorithms are often used. The basic steps of hill climbing algorithms are:

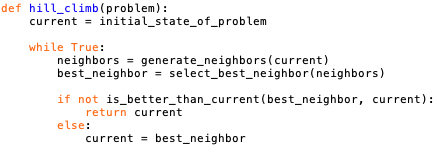

Choose the neighbor with the highest value (or the lowest, depending on the optimization problem). This is the candidate state for the next iteration. The algorithm continues until a stopping condition is met. This condition could be a maximum number of iterations, reaching a predefined solution quality, or other criteria specific to the problem being solved. However, note that hill climbing is a local search algorithm, and it may get stuck in local optima. Strategies like random restarts, simulated annealing, or genetic algorithms are often used to enhance its effectiveness in finding better solutions. A Python-like pseudocode representation is code(for instance, it is used at code): Table 3596 lists some different variations of hill climbing algorithms. These variations address specific challenges or limitations of basic hill climbing and can be adapted based on the characteristics of the optimization problem at hand. The choice of which variation to use often depends on the nature of the problem and the desired trade-offs between exploration and exploitation. Table 3596. Different variations of hill climbing algorithms.

============================================

|

||||||||||||||||||||||||||

| ================================================================================= | ||||||||||||||||||||||||||

|

|

||||||||||||||||||||||||||