Hypothesis (Predicted Output (h(x))) - Python for Integrated Circuits - - An Online Book - |

|||||||||||||||||||||

| Python for Integrated Circuits http://www.globalsino.com/ICs/ | |||||||||||||||||||||

| Chapter/Index: Introduction | A | B | C | D | E | F | G | H | I | J | K | L | M | N | O | P | Q | R | S | T | U | V | W | X | Y | Z | Appendix | |||||||||||||||||||||

================================================================================= In machine learning, a hypothesis refers to a specific model or function that you believe can approximate the underlying relationship between input data and output predictions. It's a fundamental concept in supervised learning, where you have a dataset with input features and corresponding target values, and your goal is to learn a mapping from inputs to outputs. The hypothesis is typically represented as a mathematical function, and the choice of this function depends on the type of machine learning algorithm you are using. Here are a few common samples: Linear Regression: In linear regression, the hypothesis is a linear function that relates the input features to the target variable. It can be represented as: h(x) = θ0 + θ1 * x1 + θ2 * x2 + ... + θn * xn ------------------------------- [3909a]

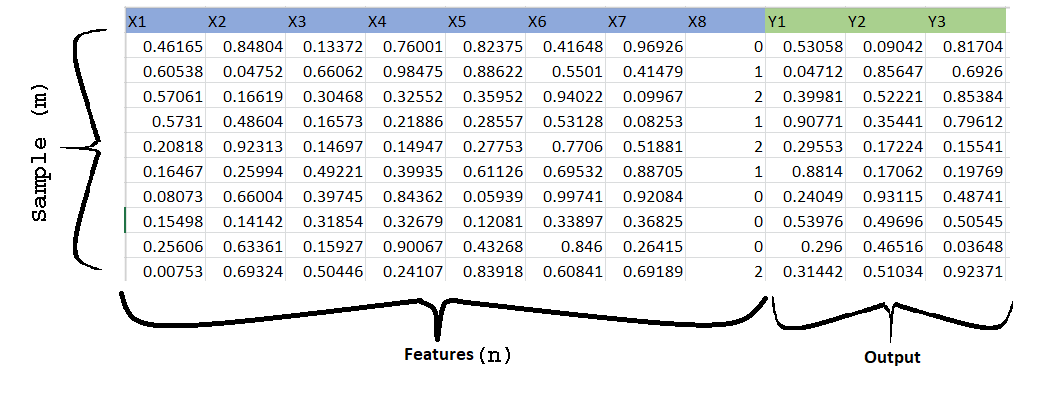

Logistic Regression: In logistic regression, the hypothesis is used for binary classification problems and is based on the logistic function. It looks like this: h(x) = 1 / (1 + e^(-z)) ------------------------------- [3909b] Here, z is a linear combination of input features, and h(x) represents the probability that a given input belongs to the positive class. Neural Networks: In deep learning, the hypothesis is represented by a neural network architecture. A neural network consists of multiple interconnected layers of nodes (neurons), and the hypothesis is the overall function computed by the network. h(x) = f(W2 * f(W1 * x + b1) + b2) ------------------------------- [3909c] Here, h(x) is the output of the neural network, W1, W2, b1, b2 are the weights and biases of the network's layers, and f represents the activation function. The process of machine learning involves finding the best possible values for the parameters (e.g., θ in linear regression or weights in a neural network) of the hypothesis function so that it can make accurate predictions on new, unseen data. This is typically done through optimization techniques, such as gradient descent, which minimize the difference between the predicted values and the actual target values, by choising correct parameters (θ), in the training dataset. Figure 3909a shows how supervised learning works. To provide a precise characterization of the supervised learning problem, the objective is to acquire a function h: X → Y from a given training set. This function, denoted as h(x), should excel at predicting the associated value y. Traditionally, this function h is referred to as a "hypothesis" due to historical conventions.

Figure 3909a. Workflow of supervised learning. In machine learning, hypothesis representation is a fundamental concept, especially in supervised learning tasks like regression and classification. The hypothesis is essentially the model's way of making predictions or approximating the relationship between input data and the target variable. It is typically represented as a mathematical function or a set of parameters that map input features to output predictions. Whether the hypothesis obtained from a machine learning process is a random variable or not depends on various factors, and it's not only determined by whether the input data is a random variable. Table 3909a list the randomness of hypothesis depending on learning algorithms and input data in the learning process of "data > learning algorithm > hypothesis". Table 3909a. Randomness of hypothesis depending on learning algorithms and input data.

Here are two common samples of hypothesis representation: Linear Regression: In linear regression, the hypothesis represents a linear relationship between input features and the target variable. The hypothesis function is defined as: Here,

The goal of linear regression is to find the values of that minimize the difference between the predictions and the actual target values in the training data. When you have multiple training samples (also known as a dataset with multiple data points), the equations for the hypothesis and the cost function change to accommodate the entire dataset. This is often referred to as "batch" gradient descent, where you update the model parameters using the average of the gradients computed across all training samples.

Figure 3909b. Multiple training samples, features, and outputs in csv format. Hypothesis (for multiple training samples): The hypothesis for linear regression with multiple training samples is represented as a matrix multiplication. Let be the number of training samples, be the number of features, be the feature matrix, and be the target values. The hypothesis can be expressed as: where,

Cost Function (for multiple training samples): The cost function in linear regression is typically represented using the mean squared error (MSE) for multiple training samples. The cost function is defined as: where,

Gradient Descent (for updating ): To train the linear regression model, you typically use gradient descent to minimize the cost function. The update rule for � in each iteration of gradient descent is as follows: where,

In each iteration, each parameter is updated simultaneously using the gradients calculated over the entire training dataset. This process is repeated until the cost function converges to a minimum. This batch gradient descent process allows you to find the optimal parameters that minimize the cost function, making your linear regression model fit the training data as closely as possible. Logistic Regression: In logistic regression, the hypothesis represents the probability that a given input belongs to a particular class. The hypothesis function is defined as the sigmoid (logistic) function: Here,

Logistic regression is commonly used for binary classification tasks, where the goal is to classify data points into one of two classes based on the probability predicted by the hypothesis. In both samples, the hypothesis is represented as a mathematical function involving model parameters (�) and input features (�). The machine learning algorithm's task is to learn the optimal values of these parameters during training so that the hypothesis can make accurate predictions on new, unseen data. The choice of hypothesis representation can vary depending on the specific machine learning algorithm and problem at hand. More complex models, such as neural networks, use more intricate hypothesis representations involving multiple layers of transformations and activation functions. However, the basic idea remains the same: the hypothesis represents how the model maps inputs to outputs. The process, where the goal is to minimize the difference between the predicted output (hypothesis(x)) and the actual output (y), is known as "training" or "model training" in machine learning. The difference can be given by (h(x)-y)2. In supervised learning, this is a fundamental step where the machine learning algorithm adjusts its parameters to make the predictions as close as possible to the true target values in the training dataset. The process of minimizing the difference between the hypothesis and the actual target is typically achieved through various optimization techniques, such as gradient descent, which iteratively updates the model's parameters to reduce the prediction error. The objective is to find the set of parameters that results in the best possible fit of the model to the training data, allowing it to generalize well to new, unseen data. ============================================

|

|||||||||||||||||||||

| ================================================================================= | |||||||||||||||||||||

|

|

|||||||||||||||||||||