=================================================================================

In data science and machine learning, an Application Programming Interface (API) refers to a set of rules and protocols for building and interacting with software applications. APIs play a crucial role by allowing different software systems to communicate with each other, facilitating the integration and functionality of different machine learning models and data science tools. Here's how APIs are commonly used in these fields:

- Data Access: APIs are often used to retrieve data from remote sources, which is essential for data science projects that depend on real-time or dynamic data. For example, APIs can be used to access datasets from government databases, social media platforms, or financial markets.

- Model Deployment: Once a machine learning model is trained, it can be deployed through an API, making it accessible to other software systems. This allows external systems to input new data and receive predictions or analyses from the model. This is particularly useful for integrating machine learning models into existing production environments without having to rebuild or significantly alter the existing infrastructure.

- Automation of Workflows: APIs can automate workflows by enabling the seamless transfer of data between different tools and platforms used in data science workflows, such as data cleaning tools, statistical analysis packages, and visualization tools.

- Collaboration and Sharing: APIs facilitate collaboration across different teams and organizations by standardizing how systems interact. They allow developers to build on each other's work without needing to share the entire codebase, preserving intellectual property while encouraging innovation.

- Scalability and Maintenance: APIs help in scaling applications by providing a modular architecture where different components can be updated independently. In machine learning, this means that a model can be improved or replaced without altering other parts of the system.
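As a rough illustration of the data access use case above, the sketch below builds a request URL and parses a JSON response using only the Python standard library. The endpoint `api.example.com`, the parameter names, and the sample payload are all made up for illustration; a real service defines its own URL scheme and response format, and no network call is made here.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint, for illustration only; not a real service.
BASE_URL = "https://api.example.com/v1/observations"


def build_request_url(city: str, units: str = "metric") -> str:
    """Encode the query parameters into a request URL."""
    return f"{BASE_URL}?{urlencode({'city': city, 'units': units})}"


def parse_observations(payload: str) -> list[tuple[str, float]]:
    """Turn a JSON response body into (timestamp, temperature) rows."""
    records = json.loads(payload)["observations"]
    return [(r["time"], float(r["temperature"])) for r in records]


# Made-up response body standing in for what such a service might return.
sample_payload = json.dumps({
    "observations": [
        {"time": "2024-01-01T00:00Z", "temperature": 3.5},
        {"time": "2024-01-01T01:00Z", "temperature": 3.1},
    ]
})

url = build_request_url("New York")
rows = parse_observations(sample_payload)
```

In a real project the payload would come from an HTTP client call to the URL, and the parsed rows would typically be loaded into a table or data frame for analysis.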

For example, popular machine learning libraries like TensorFlow, PyTorch, and Scikit-Learn provide APIs that allow users to access powerful computational tools and algorithms through relatively simple commands. These APIs abstract away much of the complexity involved in programming these functionalities from scratch. Each library's API is tailored to its own ecosystem and supports a variety of tasks in machine learning and deep learning. The APIs offered by each of these frameworks are:

- TensorFlow:

TensorFlow, developed by Google, is a framework designed primarily for deep learning that also supports traditional machine learning. Its API is highly flexible and is used for tasks ranging from developing complex neural networks to performing standard machine learning. Key features of the TensorFlow API include:

- High-level APIs such as Keras for building and training deep learning models using straightforward, modular building blocks.

- Low-level APIs for fine control over model architecture and training processes, which are useful for researchers and developers needing to create custom operations.

- Data API for building efficient data input pipelines, which can preprocess large amounts of data and augment datasets dynamically.

- Distribution Strategy API for training models on different hardware configurations, including multi-GPU and distributed settings, facilitating scalability and speed.

- TensorBoard for visualization, which helps in monitoring the training process by logging metrics like loss and accuracy, as well as visualizing model graphs.
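A minimal sketch of two of the TensorFlow features listed above: a `tf.data` input pipeline feeding a small Keras model. The synthetic dataset, layer sizes, and epoch count are arbitrary choices for illustration, not recommendations; the Distribution Strategy API and TensorBoard are left out to keep the example short.

```python
import numpy as np
import tensorflow as tf

# Synthetic binary classification data (an assumption for this sketch).
rng = np.random.default_rng(0)
X = rng.normal(size=(256, 4)).astype("float32")
y = (X.sum(axis=1) > 0).astype("float32")

# Data API: build a shuffled, batched input pipeline.
dataset = (
    tf.data.Dataset.from_tensor_slices((X, y))
    .shuffle(256, seed=0)
    .batch(32)
)

# High-level Keras API: stack layers, then compile and fit.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
history = model.fit(dataset, epochs=3, verbose=0)

# Predicted probabilities for the first five samples.
probs = model.predict(X[:5], verbose=0)
```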

- PyTorch:

Developed by Facebook (now Meta), PyTorch is favored particularly in the academic and research communities due to its ease of use and its dynamic computation graphs. Its API features include:

- The core torch API, which provides a wide range of tools for tensors and mathematical operations.

- torch.nn, which provides building blocks for neural networks, like layers, activation functions, and loss functions, all built on the nn.Module base class.

- DataLoader and Dataset APIs, part of torch.utils.data, for handling and batching data efficiently, crucial for training models effectively.

- TorchScript for converting PyTorch models into a format that can be run in a high-performance environment independent of Python.

- Autograd (torch.autograd), which automatically computes gradients, an essential component for training neural networks.
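The PyTorch pieces listed above can be sketched together in a short training loop. The synthetic regression data, the `TinyRegressor` class name, and the hyperparameters are assumptions made for this example; TorchScript export is omitted.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Synthetic regression data: y = 2*x1 - 3*x2 + noise (illustrative only).
X = torch.randn(128, 2)
y = X @ torch.tensor([2.0, -3.0]) + 0.1 * torch.randn(128)

# Dataset and DataLoader handle batching and shuffling.
loader = DataLoader(TensorDataset(X, y), batch_size=32, shuffle=True)


class TinyRegressor(nn.Module):
    """A single linear layer wrapped in an nn.Module subclass."""

    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(2, 1)

    def forward(self, x):
        return self.linear(x).squeeze(-1)


model = TinyRegressor()
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

losses = []  # mean training loss per epoch
for epoch in range(20):
    total = 0.0
    for xb, yb in loader:
        optimizer.zero_grad()
        loss = loss_fn(model(xb), yb)
        loss.backward()   # autograd computes the gradients
        optimizer.step()  # the optimizer applies them
        total += loss.item()
    losses.append(total / len(loader))
```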

- Scikit-Learn:

Scikit-Learn is particularly popular for traditional machine learning and comes with a simple, consistent API that is ideal for newcomers and for deploying models quickly. Key API features include:

- Estimator API, which is used across all learning algorithms in Scikit-Learn, ensuring consistency and simplicity. It standardizes the functions for building models, fitting them to data, and making predictions.

- Transformers and Preprocessors for data scaling, normalization, and transformation, which are crucial for preparing data for training.

- Pipeline API, which chains preprocessors and estimators into a single coherent workflow, simplifying the code and reducing the chance of errors.

- Model Evaluation Tools which provide metrics, cross-validation, and other utilities to evaluate and compare the performance of algorithms.
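A minimal sketch of how the Scikit-Learn features above fit together: a transformer and an estimator chained in a `Pipeline`, then fitted, used for prediction, and evaluated. The choice of the Iris dataset, `StandardScaler`, and `LogisticRegression` is only for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Pipeline API: chain a preprocessor and an estimator into one object.
pipeline = Pipeline([
    ("scale", StandardScaler()),
    ("clf", LogisticRegression(max_iter=1000)),
])

# Estimator API: the same fit / predict / score calls work on any estimator.
pipeline.fit(X_train, y_train)
predictions = pipeline.predict(X_test)
test_accuracy = pipeline.score(X_test, y_test)

# Model evaluation tools: 5-fold cross-validation on the whole pipeline.
cv_scores = cross_val_score(pipeline, X, y, cv=5)
```

Because the scaler sits inside the pipeline, it is refitted on each training fold during cross-validation, which avoids leaking statistics from the held-out fold.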

Each of these frameworks offers comprehensive APIs that cater to different needs within the machine learning lifecycle, from data preprocessing and model building to training, evaluating, and deploying models.

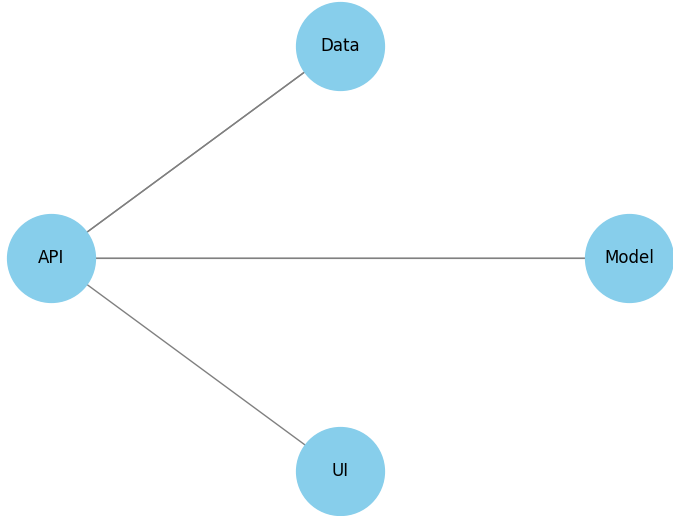

Figure 4506 shows the API structure in machine learning. The diagram depicts a typical setup in which an API sits between a user interface, a machine learning model, and a data storage system. The "User Interface" communicates with the "API", and the "API" sends requests to and receives responses from both the "ML Model" and "Data Storage". All data therefore flows through the API rather than directly between the other components.

Figure 4506. API structure in machine learning.
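The structure in the figure can be sketched in plain Python as follows. The class names `DataStorage`, `MLModel`, and `PredictionAPI`, the request format, and the fixed linear rule standing in for a trained model are all hypothetical; a real deployment would put this logic behind a web framework and a real database.

```python
from dataclasses import dataclass, field


@dataclass
class DataStorage:
    """In-memory stand-in for the data storage component."""
    records: list = field(default_factory=list)

    def save(self, record: dict) -> None:
        self.records.append(record)


class MLModel:
    """Toy model: a fixed linear rule standing in for a trained model."""

    def __init__(self, weights: list, bias: float):
        self.weights = weights
        self.bias = bias

    def predict(self, features: list) -> float:
        return sum(w * x for w, x in zip(self.weights, features)) + self.bias


class PredictionAPI:
    """The API layer: the only component the user interface talks to."""

    def __init__(self, model: MLModel, storage: DataStorage):
        self.model = model
        self.storage = storage

    def handle(self, request: dict) -> dict:
        features = request.get("features")
        # Validate the request before it reaches the model.
        if not isinstance(features, list) or len(features) != len(self.model.weights):
            return {"status": 400, "error": "invalid features"}
        prediction = self.model.predict(features)
        # Log the request and result to storage.
        self.storage.save({"features": features, "prediction": prediction})
        return {"status": 200, "prediction": prediction}


storage = DataStorage()
api = PredictionAPI(MLModel([0.5, -1.0], 2.0), storage)
response = api.handle({"features": [4.0, 1.0]})
```

Because callers only see `handle`, the model or the storage backend can be swapped without changing the user interface, which is the modularity point made earlier.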

In a broad sense, all Python libraries can be considered APIs because they provide a set of functions, classes, and modules that you can call from your code. These libraries offer an "interface" to programming functionalities that allow you to build more complex programs without needing to handle the lower-level details.
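As a small illustration of this point, the standard library's `statistics` module exposes functions such as `mean` and `median`; calling them is using the module's API, and the caller never sees how they are implemented. The sample values are arbitrary.

```python
import statistics

values = [2.0, 4.0, 4.0, 5.0]

# The caller relies only on the function names and their documented behavior.
mean_value = statistics.mean(values)
median_value = statistics.median(values)
```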

============================================

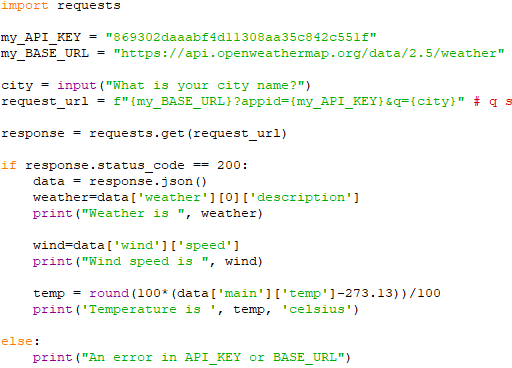

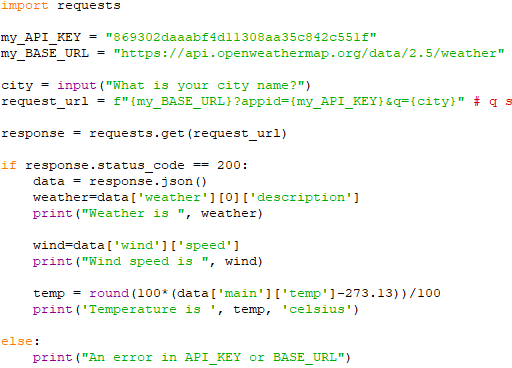

Example: using an API to retrieve the weather for a city. Code:

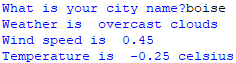

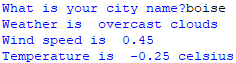

Output:
