Mixture of Gaussians (MoG) - Python Automation and Machine Learning for ICs - An Online Book

Python Automation and Machine Learning for ICs http://www.globalsino.com/ICs/

A Mixture of Gaussians (MoG) is a probabilistic model that represents a mixture distribution, that is, a combination of multiple Gaussian (normal) distributions. In machine learning, particularly in unsupervised learning, the Mixture of Gaussians model is often used to model complex probability distributions:

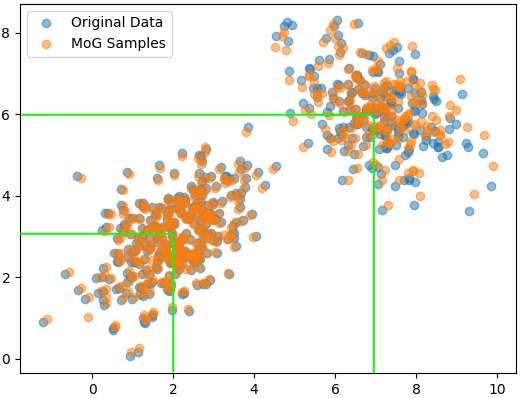

The joint probability of a data point $x^{(i)}$ and its latent variable $z^{(i)}$ (the label of the Gaussian component that generated $x^{(i)}$) is the product of the conditional probability of the data given the latent variable and the probability distribution of the latent variable:

$$p(x^{(i)}, z^{(i)}) = p(x^{(i)} \mid z^{(i)})\, p(z^{(i)})$$

where $z^{(i)} \in \{1, \dots, k\}$ takes the value $j$ with probability $p(z^{(i)} = j) = \phi_j$, and

$$x^{(i)} \mid z^{(i)} = j \sim \mathcal{N}(\mu_j, \Sigma_j) \qquad [3698c]$$

Figure 3698a shows a Mixture of Gaussians (MoG) in one dimension (1D) and in two dimensions (2D). In the 1D case, the first Gaussian has a mean of 2 and the second Gaussian (orange cluster) has a mean of 7. Because these distributions are one-dimensional, each mean is a single scalar. For a two-dimensional (multivariate) Gaussian distribution, the mean is instead a vector with two components, one for each dimension. In Figure 3698a (b), the blue and orange clusters are clearly distinct because they were intentionally generated from two Gaussian distributions with different mean vectors and covariance matrices.

The key idea behind a MoG model is that the observed data is assumed to be drawn from a mixture of multiple Gaussian distributions. Each Gaussian distribution represents one "component" of the mixture, and the overall distribution is a weighted sum of these components:

$$p(x) = \sum_{j=1}^{k} \phi_j \, \mathcal{N}(x; \mu_j, \Sigma_j), \qquad \phi_j \ge 0, \quad \sum_{j=1}^{k} \phi_j = 1$$
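The generative process behind [3698c] can be sketched in a few lines: first draw the latent component label, then draw the data point from that component's Gaussian. This is a minimal 1D sketch using only the Python standard library; the function names `joint_density` and `sample_mog_1d`, the equal weights, and the unit standard deviations are illustrative assumptions (only the means 2 and 7 come from the 1D example above).

```python
import random
from statistics import NormalDist


def joint_density(x, z, weights, means, stds):
    """Joint density p(x, z) = p(x | z) * p(z) for a 1D mixture (cf. [3698c])."""
    return NormalDist(means[z], stds[z]).pdf(x) * weights[z]


def sample_mog_1d(n, weights, means, stds, seed=0):
    """Draw n points from a 1D Mixture of Gaussians via its generative process."""
    rng = random.Random(seed)  # local RNG so results are reproducible
    xs, zs = [], []
    for _ in range(n):
        # Step 1: choose the latent component z(i) with probability weights[z].
        z = rng.choices(range(len(weights)), weights=weights)[0]
        # Step 2: draw x(i) from the chosen Gaussian N(means[z], stds[z]**2).
        x = rng.gauss(means[z], stds[z])
        zs.append(z)
        xs.append(x)
    return xs, zs


# Hypothetical parameters: equal weights, unit standard deviations,
# and the means 2 and 7 from the 1D example in the text.
weights, means, stds = [0.5, 0.5], [2.0, 7.0], [1.0, 1.0]
xs, zs = sample_mog_1d(2000, weights, means, stds)

# Summing the joint density over z recovers the mixture density p(x) at x = 4.5.
p_x = sum(joint_density(4.5, z, weights, means, stds) for z in range(2))
```

Summing `joint_density` over every value of `z` is exactly the weighted sum of components, so the same helper connects the joint probability to the mixture density.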

When the Mixture of Gaussians model is fitted to the combined synthetic data, it attempts to learn the parameters (means, covariances, and weights) of two Gaussian components, thereby capturing the structure of the original data, which consists of two clusters.
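One common way to learn these parameters is the Expectation-Maximization (EM) algorithm, which alternates between computing each point's responsibility $p(z^{(i)} = j \mid x^{(i)})$ (E-step) and re-estimating the weights, means, and variances from those responsibilities (M-step). The sketch below is a minimal 1D, pure-Python version; the function `fit_mog_em_1d`, the quantile-based initialization, the fixed iteration count, and the synthetic data are all hypothetical and not taken from the original code. For real work, scikit-learn's `sklearn.mixture.GaussianMixture` provides a full implementation.

```python
import math
import random


def normal_pdf(x, mean, var):
    """Density of the 1D Gaussian N(mean, var) at x."""
    return math.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def fit_mog_em_1d(xs, k=2, n_iter=50):
    """Fit a 1D Mixture of Gaussians with EM; returns (weights, means, variances)."""
    n = len(xs)
    ordered = sorted(xs)
    # Initialization (an assumption): means at evenly spaced quantiles,
    # equal weights, and the overall data variance for every component.
    means = [ordered[int((j + 0.5) * n / k)] for j in range(k)]
    overall_mean = sum(xs) / n
    overall_var = sum((x - overall_mean) ** 2 for x in xs) / n
    variances = [overall_var] * k
    weights = [1.0 / k] * k

    for _ in range(n_iter):
        # E-step: responsibility r[j] = p(z(i) = j | x(i)) for every point.
        resp = []
        for x in xs:
            p = [weights[j] * normal_pdf(x, means[j], variances[j]) for j in range(k)]
            total = sum(p) or 1e-300  # avoid division by zero on underflow
            resp.append([pj / total for pj in p])

        # M-step: re-estimate each component from its soft assignments.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            weights[j] = nj / n
            means[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            var_j = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, xs)) / nj
            variances[j] = max(var_j, 1e-6)  # keep variances strictly positive
    return weights, means, variances


# Hypothetical synthetic data: two 1D clusters, similar in spirit to Figure 3698a (a).
rng = random.Random(0)
data = [rng.gauss(2.0, 1.0) for _ in range(500)] + [rng.gauss(7.0, 1.5) for _ in range(500)]
weights, means, variances = fit_mog_em_1d(data)
```

Because the two synthetic clusters are well separated, the learned means should land near 2 and 7 and each weight near 0.5. On overlapping data, EM can converge to a local optimum, which is why practical implementations often try several initializations.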

(a)

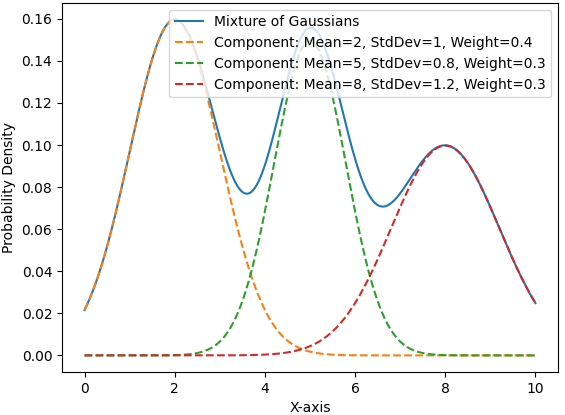

(b)

Figure 3698b shows a 1D plot of a Mixture of Gaussians, generated by code that calculates the probability density function (PDF) of the mixture. The main function defines the parameters of three Gaussian components, generates evaluation points along the x-axis, and then plots both the overall Mixture of Gaussians and the individual Gaussian components.
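The PDF calculation described above can be sketched as follows. The three sets of component parameters are hypothetical placeholders, not the values used to produce Figure 3698b, and plotting is left out so that the sketch runs with only the standard library.

```python
from statistics import NormalDist


def component_pdfs(x, weights, means, stds):
    """Weighted density phi_j * N(x; mu_j, sigma_j^2) of each component at x."""
    return [w * NormalDist(m, s).pdf(x) for w, m, s in zip(weights, means, stds)]


def mog_pdf(x, weights, means, stds):
    """Mixture density p(x): the sum of the weighted component densities."""
    return sum(component_pdfs(x, weights, means, stds))


def main():
    # Hypothetical parameters for three components (weights sum to 1).
    weights = [0.3, 0.5, 0.2]
    means = [-2.0, 1.0, 5.0]
    stds = [0.8, 1.2, 0.6]

    # Evaluation grid along the x-axis: -6.0 to 8.0 in steps of 0.01.
    xs = [-6.0 + 0.01 * i for i in range(1401)]
    total = [mog_pdf(x, weights, means, stds) for x in xs]
    parts = [component_pdfs(x, weights, means, stds) for x in xs]
    return xs, total, parts


xs, total, parts = main()
```

To draw a figure like Figure 3698b, `xs` and `total`, together with each component's column of `parts`, could be passed to `matplotlib.pyplot.plot`. Because the weights sum to 1, the area under `total` is approximately 1, which is a quick sanity check on the parameters.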
